Animation for Sound

Human-Cyborg Relations converts static sounds into dynamic, immersive audio experiences. All proceeds support FIRST.

I am Michael Perl. I am a nine-time founder with a degree in Music, Science, and Technology from Stanford.

In 2020, I accidentally made a landmark discovery: R2-D2 has a long-lost codified language.

Before Star Wars, depictions of robots spoke in human language. Legendary sound desinger Ben Burtt developed the first "binary" voice for R2 using beeps and whistles. It instantly became one of the world's most iconic, universally beloved, and memorable examples of experimental sound design. But no one besides Ben knew how deep it went.

For all the attention paid to Star Wars’ visual design, no one could articulate how R2’s voice became a cultural phenomenon that still resonates half a decade later.

As an audio enthusiast and archivist, I set out to answer this question. I connected with Lucasfilm master droid builder Lee Towersey and reverse engineered R2-D2's speech. As it turns out, R2's voice is striking because he actually speaks with a thoughtful, emotional, codified language. And that speech process can be recreated. From this revelation spawned the R2-D2 Vocalizer: a language model that generates organic, infinitely varied R2-D2 speech in realtime.

I found that this same design process pioneered by Ben Burtt could be used to covert static sounds of any kind into animated, organic, infinitely varied audio experiences. Thus, Human-Cyborg Relations was born.

When audio and visual experiences work in concert, that's when magic happens. Walt Disney knew this well. He called them Audio-Animatronics for a reason.

Human-Cyborg Relations is used by thousands of droid builders (including Lucasfilm creature team members) for live experiences. However, the technology is adaptable to any environment. I would love to see the hardware and software get more use in Disney parks, at conventions, and in the development of new droid voices.

Please note: Human-Cyborg Relations was simply a passion project — for historical and charitable benefit — that I undertook between startups. While I do my best to revisit it periodically, I unfortunately do not have the bandwidth to help with implementation or troubleshooting questions. Kindly direct those to droid building forums and Facebook groups. For other inquiries, please contact me.

Powered by advanced research into the previously undiscovered, codified language of R2-D2.

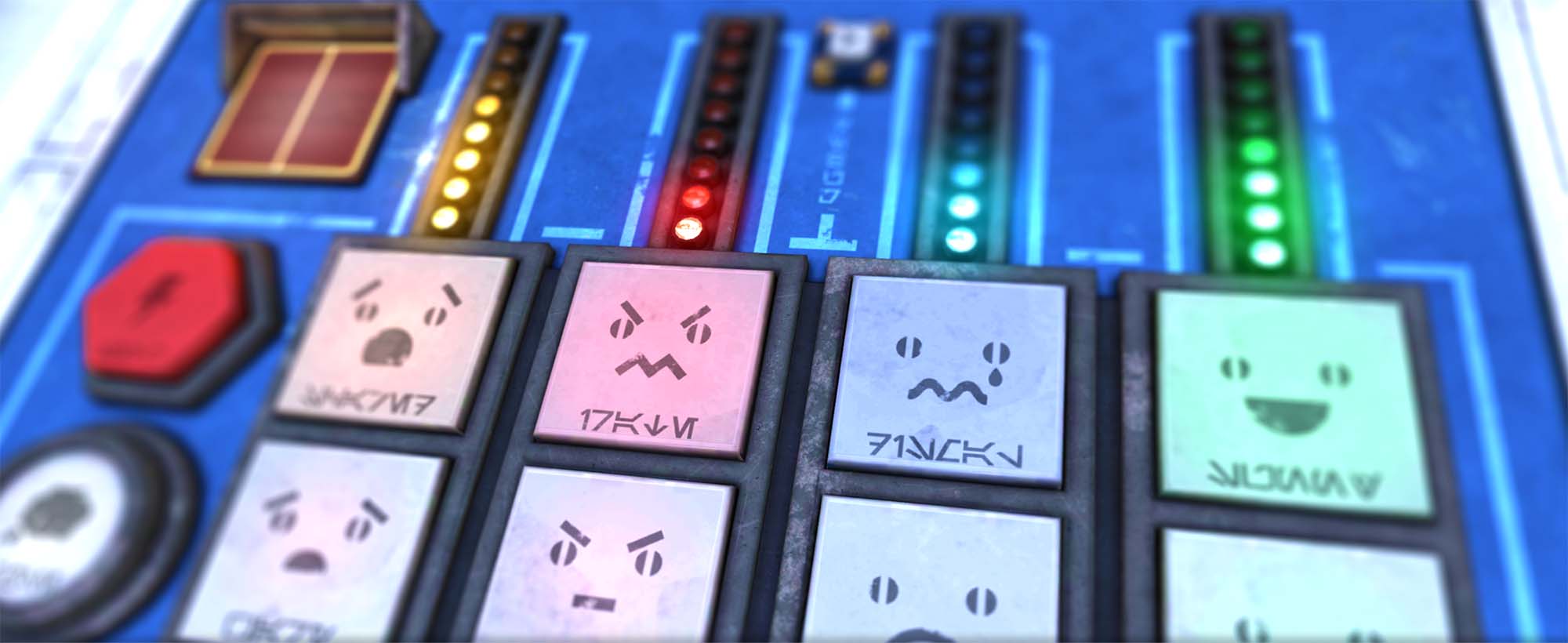

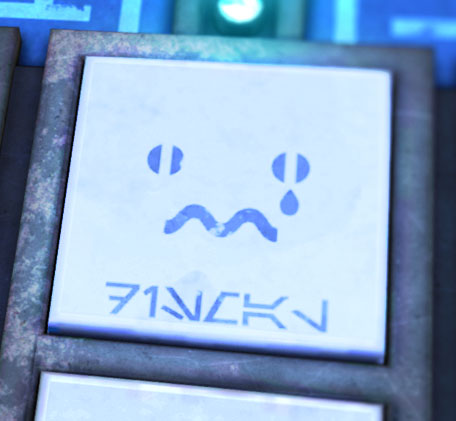

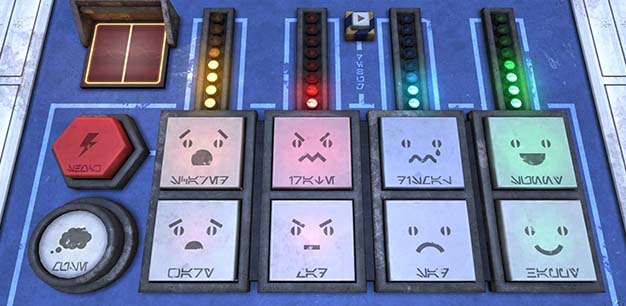

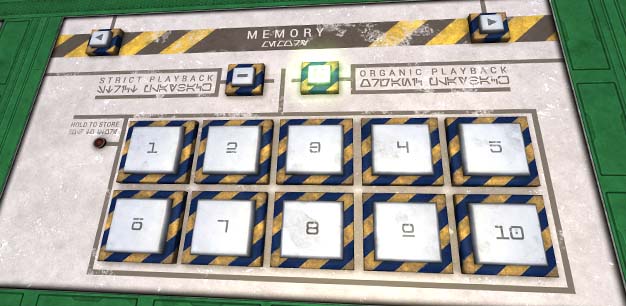

This language model generates emotionally and grammatically thoughtful R2-D2 vocalizations. Billions of rules dictate how each phoneme should be selected, processed, and sequenced to achieve emotional and canonical accuracy.

Design your own astromech.

Create a droid that communicates only in beeps, or that never whistles. A soprano. A bass. A frenetic fast talker.

Tailor a personality that governs how your droid responds to stimuli... tendences that evolve as the droid learns, grows, and develops a living memory.

Gonks are famous for speaking just one word. Only a handful of variations on that word were originally recorded.

Using individual phonemes from those original recordings, the Gonk Vocalizer synthesizes brand new Gonk vocalizations in realtime. Hear a Gonk express itself with infinite, organic variety. No two "Gonk"s will ever sound quite the same.

Acoustically tuned sound systems for small, suboptimal spaces.

This suite of speakers, enclosures, and digital signal processing is tailored to the extreme space and positioning constraints of droids. Produce deep bass, flat frequency response, and wide dispersion that sounds spectacular to audiences in any listening position.

Presenting, for the first time ever, the raw isolated audio of Leia's Hologram Message.

The audio was separated using a combination of vocal isolation algorithms and manual spectrogam editing.

Existing voice processing apps are slow and low quality. They all have the same limited feature set because they avoid the most complex (and interesting) voice effects.

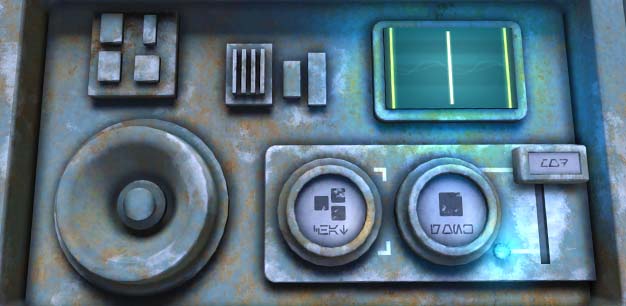

Human-Cyborg Relations modulators include a D-O transformer. A filter bank matches the timbre of D-O's voice, and an adaptive delay chain — the first of its kind — recreates his stutter.